I. Introduction: Why Memesis?

Memesis is a 3D virtual reality environment with a biofeedback interface designed for use in CAVEs (Cave Automatic Virtual Environments). It is an experiment in interactive narrative designed to test certain theories of presence and immersion in the environment and of transparency or immediacy in the interface. The biofeedback interface requires a shift in narrative strategies. The aim is to provide the user with a greater sense of presence than is possible with more traditional VRE design.

How is narrative changed when the user chooses how to navigate through a hypermedia text not only consciously but also subconsciously? The environment of Memesis is designed to resemble a haunted house that collects information about the user's phobias and deep-seated psychological fears in order to provide a more thrilling experience. The emphasis is on the development of an interface designed to read subconscious input from the user as well as user choices ("point and clicks"). If the project is successful, additional VREs with the same interface are planned to carry the interactive narrative and engagement research even further.

Memesis is designed to be a short experience (15 to 20 minutes) in an environment that collects biofeedback data from the user and incorporates that data back into the environment. There are three elements to this task: a new approach to interactive narrative; a new way of thinking about and designing the relationship between the user and the interactive environment; and a VR biofeedback interface that makes the first two possible. If successful, the project should in the long term add important insights to current research on presence, transparency or immediacy, and engagement in virtual reality and computer games. In addition, the human subject study that will be conducted in support of the project should yield new insights on the differences in engagement reflected in conscious and subconscious user choices. If the project is successful in CAVE environments, future work is planned for a console game delivery system, which could have broad applications for computer games in general and interactive learning design in particular.

II. History of project to date

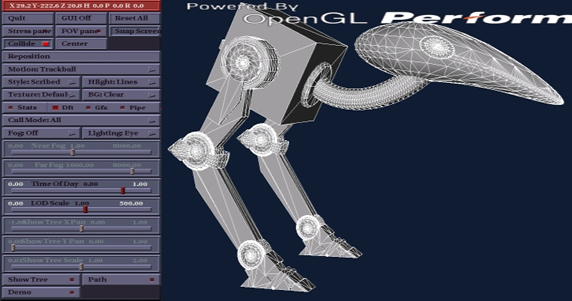

Alison McMahan first designed the Memesis experiment in the spring of 2001, while Associate Professor of Film and New Media Studies at the University of Amsterdam. In April of 2001 Vassar College awarded her a two-year Mellon post-doctoral fellowship for the project, for the period January 2002 to December 2003. She first presented the project to the academic community in the form of a poster at the COSIGN 2001 conference at the CWI in Amsterdam, the Netherlands, and gave a report of work in progress at the 6th World Multiconference on Systemics, Cybernetics and Informatics (SCI 2002), Orlando, July 14-18, 2002. In June of 2002 she was awarded two Academic Research grants from Vassar College, one for biofeedback equipment and one for a student to work on programming during the summer of 2002. She also received an Undergraduate Research Summer Institute (URSI) grant for the summer of 2002. As a result of these grants Matt Huntington, a Vassar junior majoring in computer science, was able to spend the summer of 2002 learning Maya and completing several important 3D elements of the Memesis environment, including the bird-bot "spirit guide," which functions as a catalyst and "help menu" in the environment. As a result of the second Academic Research grant, she was able to hire Michael Palmer, a Vassar senior in History and a consultant at the Media Cloisters, to put together an initial collection of sound effects and to research the compatibility between Maya, Director 8.5, and VRML (Virtual Reality Modeling Language). Once awarded the Academic Research grant for the biofeedback readers, McMahan researched the best type of reader to be had for the budget, in consultation with Greg Priest-Dorman, Systems Administrator in the Vassar Computer Science Department and an expert in wearable computing.

In addition to the above-named grants, McMahan submitted an Institutional Review Board (IRB) proposal for a human subject study relevant to Memesis. This proposal was approved in September 2003, and the study took place in the fall of 2003. Rebecca Tortell, a Vassar psychology student, assisted with this study, which involved measuring galvanic skin response and heart rate response to selected video clips.

The project became an official Cloisters project in October of 2002, when it was approved by the Cloisters Steering Committee (which at that time included Faculty Directors Michael Joyce and Colleen Cohen and Cloisters curator Rain Breaw, among others). At that point several Cloisters student employees were assigned to the project: Lauren Klein and Jason Fotinatos, with assistance from Matt Huntington. All three of these students continued to build models for the Memesis environment using Maya 4.5. Matt and Jason graduated in June of 2003, and Tom Gemelli joined the project at the same time. In September of 2003 another Cloisters student, Amanda Tomaszczuk, joined the team. Amanda edited video cut-scenes for the Haunted House scenario using Final Cut Pro.

Various volunteers have also worked on the project from time to time, specifically Saif Ansari, a non-Vassar student who modeled the Haunted Mansion environment and the furniture to go into it, and Vivek Mahapatra, a Vassar student who built the model of the ship for the underwater exploration scenario.

An additional URSI grant awarded for the summer of 2003 enabled McMahan to hire Jason Teirstein to work on the next steps in the pipeline: converting the models to Inventor files, re-texturing the models in Performer, and finally adding behaviors to the environment in Ygdrasil, along with sound files provided by Tom Gemelli, who worked on the later end of the pipeline as well as providing basic Maya models. Teirstein continues to work on implementing models and behaviors in Ygdrasil for the fall of 2003 as McMahan's student assistant. An HHMI grant enabled us to purchase two copies of Nugraf (Polytrans) software with the Maya plug-in package, which made the process of converting models for use in Ygdrasil much more streamlined.

During the summer of 2003 McMahan served as coordinator for the Summer Media Institute for the second year in a row. As part of her duties she arranged for Dave Pape, the computer scientist who wrote the CAVElib software and Ygdrasil, to make three extended trips to consult with Vassar on the construction of a "mini-CAVE" system for the Media Cloisters (see Pape's webpage, www.resumbrae.com, for information on this system). She also arranged for William Sherman from the NCSA to give a one-day workshop to the Vassar community on his software, FreeVR. Both Pape and Sherman worked on upgrading the code for the Vassar mini-CAVE system, which runs Suse 8.2, FreeVR for the tracking (Vassar uses the Ascension Flock of Birds), Performer and Ygdrasil. The system uses stereo frontal projection, which gives a small group of people something similar to a CAVE experience and is also useful in a classroom setting.

McMahan made every effort to make her research known to the Vassar community, specifically by putting relevant information on the Vassar website, giving an URSI lecture describing her work on July 10, 2002, and another in July of 2003, and giving a research presentation to incoming Vassar freshmen on August 27, 2002. The mini-CAVE was set up for the URSI symposium on September 18, 2003, and a preliminary demo was given to students the week before. The final Vassar Memesis demonstration took place on Dec. 4, 2003.

McMahan has also presented her VR research at the following international conferences: VSMM2003: Hybrid Reality: Art, Technology and the Human Factor, Montreal, October 15-17, 2003; the 6th World Multiconference on Systemics, Cybernetics and Informatics (SCI 2002), Orlando, July 14-18, 2002; and the COSIGN 2001 Conference, September 2001, Amsterdam, the Netherlands. She has given lectures on her work at Georgia Tech and at the Blekinge Tekniska Högskola in Karlskrona, Sweden. In addition she published an article in The Video Game Theory Reader, an anthology edited by Mark Wolf and Bernard Perron and published by Routledge in 2003.

III. Designing for presence in Memesis

A. Sources of inspiration

Memesis was inspired by other VR environments, such as the work of Dave Pape (Crayoland), Josephine Anstey (The Thing Growing and The Trial the Trail), Char Davies (Osmose and Ephemere), Diane Gromala, Diane Piepol (Flatworld), and Jackie Morie (The Memory Stairs and DarkCon). Clive Fencott's work on virtual reality design, at www.fencott.com/clive, was another source.

Certain computer games were great sources of inspiration, such as the PC games Myst, Riven, and Myst III: Exile, the Silent Hill series, Syberia and Black and White, Sega games such as Ecco the Dolphin and Shenmue, and PS2 games like Primal.

This project was originally inspired by projects that apply VR in the treatment of phobias, such as Larry Hodges' designs for virtual environments to treat patients with fear of heights and fear of spiders at the University of North Carolina,[1] Chris Shaw's virtual reality environment for training firemen at Georgia Tech,[2] and various others.[3] [4] The design of Memesis is partly inspired by the design of these virtual environments for clinical treatment and training. The difference is that while clinicians like Larry Hodges wish to help their patients overcome their phobias by exposing them to spiders or heights in a virtual environment, we wish to titillate our users with "shock horror" experiences based on their phobic reactions to events and situations in our environment.

B. The experience of presence

The term "presence" is a rather slippery one and is often used interchangeably with equally slippery terms such as "immersion" and "engagement." To elaborate a coherent set of aesthetic criteria that my team[5] and I could work from, I carried out a survey of the literature on presence, especially as defined for use in clinical VR.[6] The most useful work in the field is that of Matthew Lombard and Theresa Ditton,[7] who defined presence as "the artificial sense ... a user has in a virtual environment that the environment is unmediated."[8] [9] They surveyed the literature on presence and found that other researchers had conceptualized presence as the result of some or all of six different factors: the quality of social interaction; realism in the environment (graphics, sound, etc.); the effect of "transportation"; the degree of immersiveness generated by the interface; the user's ability to accomplish significant actions within the environment, together with the social impact of what occurs in the environment; and users responding to the computer itself as an intelligent, social agent. Lombard and Ditton's elements of presence are reminiscent of Michael Heim's initial definition and categorization in his essay "The Essence of VR".[10] Heim defines VR as "an event or entity that is real in effect but not in fact... The public knows VR as a synthetic technology combining 3-D video, audio and other sensory components to achieve a sense of immersion in an interactive, computer-generated environment." According to Heim, there are seven elements of VR: Simulation, Interaction, Artificiality, Immersion, Telepresence, Full-Body Immersion, and Networked Communications.
However, for scientists, especially cognitive scientists and therapists using VR as a treatment tool, Lombard and Ditton's definitions make up the standard. I have slightly reformulated their six criteria for presence in order to eliminate some confusion in the terminology and to make the criteria more applicable to entertainment applications[11], such as 3-D computer games and VR environments. Here is the new formulation of the Six Dimensions of presence:

- Quality of social interaction

- Quality of realism in the environment (graphics, sound, etc.)

- The effect of teleportation or telepresence

- The degree of immersiveness generated by the interface

- The user's ability to accomplish significant actions within the environment

- The social impact of what occurs in the environment

Memesis is designed to maximize the sensation of presence through the application of as many of these criteria as possible. The first decision was to design Memesis for a CAVE because this answers to criterion #2 and criterion #4: the CAVE is very immersive, as there are stereoscopic projections on three walls and the floor (6-wall CAVEs also have the ceiling and the back wall). Projections are life-size, there is surround sound, graphics can be photorealistic (although we have not chosen to go that route), and because the user is wearing three sensors the environment can be maximally responsive to the user's movements and changes in position and eye-line. CAVEs also enable people in other CAVEs to network with each other; however, we are not building a networked application for our first prototype (the next version of Memesis will be for two people). So in this prototype we are not even trying to satisfy criterion #3.

C. The "gameplay"

The quality of social interaction in Memesis is similar to that of a typical 3-D single-player computer game, such as Silent Hill, Myst, Syberia or Primal. The user moves through the environment, either walking or traveling in a vehicle such as a swinging basket or a boat. As in those games, the world is designed fairly realistically, with paths, walls, doors and windows giving the user a sense of where to go and how to get there. Like Myst, the world of Memesis is fairly empty of occupants, except for the Bird-bot, who is the guide and companion in the game. The user realizes as gameplay progresses that though the Bird-bot will always lead her to where the next interesting adventure is, the Bird-bot's advice on what to do next, or what choice to make in a particular situation, is not always the best. The user's feelings of horror evoked by the environment should, for the most part, be fixated on the Bird-bot: for example, feelings of anger that the Bird-bot cannot be trusted. The Bird-bot is unique in the game in that it can travel all over the environment with the user, it remembers where the user has been, and it stores data from the human subject study and from previous players that it can then draw upon for its interactions with the current player.

In addition to the Bird-bot, the user interacts with other characters, such as bats in a cavern, trees that grow at an exponential rate when properly stimulated, and human characters who experience the user as a participant in their own ongoing drama. The latter are mostly in the final levels the user experiences, which are more narrative-based than the entry level. Although the characters speak, the user's sole mode of communicating is by choosing actions. This creates a limited quality of social interaction, but the level is typical of 3-D computer games and familiar to most users. All of the user's actions have significant effects (most of them negative, because it is a horror environment). In spite of the above limitations the potential for social impact is very high, because users have the possibility of coming face to face with, and perhaps recognizing for the first time, their deepest fears.

IV. Production pipeline

A. The script

The first step in the process of production is the writing of a scenario for each level. Because the entry level has multiple areas, the scripting and production of it have been modularized. Because of the biofeedback interface, a transportation feature similar to teleportation is used, except that the portals are invisible. Once the game has sufficient information from the player to judge what their greatest psychological fear is, the player is simply transported to the final level that the game's AI thinks is most suited to them (there are six to choose from in total). This teleportation convention eliminates the need for the user to walk everywhere and figure out how to get places, as they would in a more traditional game. The idea is to structure the transportation around the user's emotional states. In this prototype the teleportation events are few, but if the game were to be expanded they could be numerous. This way of moving from level to level also means that the scripting is more modular, and the overall effect is more like an episodic horror film such as Seven (1995, David Fincher). The "horror-modules" are linked thematically and by the ongoing presence of, and developing relationship with, the Bird-bot.

B. Storyboards

Once a script is available storyboards are drawn. The scenarios are then revised to include specific mentions of sounds.

C. Modelling & texturing

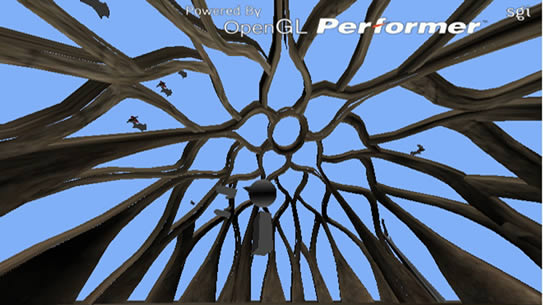

We do our modeling in Maya, and we build our models in much the same way a game modeler would, emphasizing the use of polygons. The Maya models are then converted to Inventor files. (Maya 5 has a plug-in for this on the PC version, which doesn't work very well; in October of 2003 we were finally able to purchase Polytrans/Nugraf, which should ease the process considerably.) We then open the Inventor files in Performer on a Linux computer running Suse 8.2. Texturing is done in Performer, though now that we have Polytrans we may be able to texture in Maya.

D. Sound

Sound files are assembled and created at the same time as the models are being generated.

E. Adding behaviors

The models are then transferred to Ygdrasil, the language written by Dave Pape, where we add triggers for behaviors, animation, and sounds.

Because we do not have a full CAVE at Vassar, we run a Flock of Birds system with three sensors (one worn on the head, one in the wand, and one held in the other hand) and a short range transmitter, with stereoscopic frontal projection onto a screen. This is not as perceptually immersive as we would wish but it works well enough for the design process.

F. The AI

Once this phase of design and production is complete, a further investment in AI programming will be required: for the environment to track the user's movements, for the Bird-bot and other characters to react accordingly, and for the system to interpret the user's biofeedback data and teleport the user to the appropriate final scenario.
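How the biofeedback data will be interpreted is not specified here. Purely as an illustration, the following minimal sketch assumes that the two signals measured in the human subject study (galvanic skin response and heart rate) are each compared with a resting baseline and then averaged into one reaction score. The function names, the equal weighting of the two signals, and the idea of a baseline window are all assumptions made for the example, not the project's actual design.

```python
from statistics import mean


def relative_change(baseline, samples):
    """Mean of `samples` as a fractional change from the mean of `baseline`."""
    base = mean(baseline)
    if base == 0:
        raise ValueError("baseline mean must be non-zero")
    return (mean(samples) - base) / base


def reaction_score(gsr_baseline, gsr_event, hr_baseline, hr_event):
    """Average the fractional change in skin response and in heart rate.

    Equal weighting of the two signals is an assumption of this sketch.
    """
    gsr_change = relative_change(gsr_baseline, gsr_event)
    hr_change = relative_change(hr_baseline, hr_event)
    return (gsr_change + hr_change) / 2
```

A score of this kind could be recorded once per event in the entry level, so that events can later be compared with one another.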

V. Memesis hardware and software

When this project was started, McMahan was working at the University of Amsterdam, which had its own CAVE. In order to continue with the project at Vassar, McMahan worked with Media Cloisters Curator Rain Breaw and the Cloisters Steering Committee to build a "mini-CAVE" system in consultation with Dave Pape, Assistant Professor at the University of Buffalo.

See Dave Pape's website on "Affordable VR systems" at http://resumbrae.com/info/cheapvr/casestudy.html

The first element of the mini-CAVE package is the computer: a PC with a Linux OS (in this case, Suse 8.2) installed. Vassar purchased a Flock of Birds tracking system from Ascension, Inc., with three sensors and a short-range transmitter. Twin projectors are used for frontal stereo projection onto a 70" x 70" silver Da-Lite screen. Total cost: $25,000.

This system will be used at Vassar to research and teach interactive narrative design, virtual reality, computer science, sound design, computer graphic design, and physics and biology. The software consists of FreeVR, an open source program written by William Sherman, for the tracking; Performer, for model adjustment; and Ygdrasil, written by Dave Pape, for putting in the behaviors.

VI. Gameplay

A. Who will play this game?

Anyone over 18 who does not suffer from serious phobias.

B. Length of gameplay

The world of Memesis does not have a set time or place. It is vaguely low-tech in a post-tech world, a science-fiction or dreamlike place. Generically speaking, the plots combine horror with fantasy. Because most people cannot stand to be in a CAVE environment for more than fifteen minutes (otherwise they get motion sickness), the game has to be designed in such a way that it can be completed quickly. This is why the entry level, where the user could spend seven to ten minutes, is more interactive than the final levels, where the user could spend five to seven minutes and is more engaged in a narrative.
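The timing figures above can be checked with a little arithmetic. The sketch below simply adds up the quoted ranges for the entry level and one final level and compares the longest case with the fifteen-minute comfort limit; the constant and function names are hypothetical, and only the numbers come from the text.

```python
COMFORT_LIMIT_MIN = 15  # from the text: about fifteen minutes in a CAVE

# (shortest, longest) expected stay per phase, in minutes, as quoted above
PHASES = {"entry level": (7, 10), "final level": (5, 7)}


def session_range(phases):
    """Return (shortest, longest) total session length in minutes."""
    shortest = sum(low for low, _ in phases.values())
    longest = sum(high for _, high in phases.values())
    return shortest, longest


def overrun(phases, limit):
    """Minutes by which the longest session exceeds the limit (0 if none)."""
    _, longest = session_range(phases)
    return max(0, longest - limit)
```

With the quoted figures a session runs between 12 and 17 minutes, so a user who lingers in both phases passes the fifteen-minute mark by about two minutes.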

C. Win and lose conditions for the game

The positive outcome is that the user actually reacts enough to the environment for the system to be able to determine their greatest fear and send them to the appropriate final scenario. Because this is a horror environment, it is impossible for the user to actually succeed at any of the tasks. The real winning situation is for the game to work well enough for the user that the game can provide them with a measure of self-knowledge.

Even if this desired outcome does not occur the user should get some pleasure out of exploring this beautifully designed world.

The game is pre-set for the user to fail at most of the tasks they attempt, so from a certain perspective the losing condition is the default.

D. Outline of a typical game

When the user first enters the environment he finds himself standing at the edge of a lake. In the distance a tower is visible on a cliff. The Bird-bot appears and leads the user to the rowboat. Once the user gets into the boat he realizes that a baby is crawling to the water's edge; as the boat sails away the user watches the baby fall in and drown behind him. There is nothing the user can do about this once the boat starts moving. The boat will take him on a pleasant sail around the lake, then let him off on land, near the now drowned baby.

If the user chooses to walk toward the tower visible in the distance, he will have to first go through a plain with stunted bushes and trees. In the middle of this plain there is a fountain. If the user turns on the fountain the plain will be watered and the trees will grow exponentially until the user is caught in a claustrophobically deep and dark forest. With some difficulty the user will find the path to the tower.

When climbing the steps to the tower the user will find that the steps will collapse. The only way to move ahead is to get into a basket that will take the user on a wild, vertiginous ride. The user will disembark in the top tower room where there will be baby things, but no baby. Space in this tower room is not defined as it is elsewhere. Here lines are drawn orthographically instead of in perspective, distorted the way they would look to a child. After the user has explored for a bit he will be transported to the lotus dome, where bats will lock him in place, insult him for the various things he has done wrong, and swarm him, promising to transport him to punishment.

If the user reacts most strongly to the drowning baby, his final scenario will be the "Haunted Mansion" scenario. If he reacts most strongly to the bats' insults, or to being trapped in the forest, he will end up in the "Shipwreck" scenario at the bottom of the lake. Alarm at being in a non-perspectival space will get him to the "Torture Hall" scenario.
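The selection rule just described can be sketched as a simple lookup. This is a hedged illustration rather than the game's AI: the event keys and the numeric reaction scores are hypothetical, and only the pairing of events with the three named scenarios is taken from the outline above (the other final scenarios are not described here, so they are left out).

```python
# Event keys are hypothetical labels; the pairings come from the outline above.
SCENARIO_FOR_EVENT = {
    "drowning_baby": "Haunted Mansion",
    "bat_insults": "Shipwreck",
    "trapped_in_forest": "Shipwreck",
    "non_perspectival_room": "Torture Hall",
}


def choose_final_scenario(reactions):
    """Return the scenario paired with the event that drew the strongest reaction.

    `reactions` maps event keys to a score where higher means a stronger
    reaction (the scale is an assumption). Unknown events are ignored, and
    None is returned when no known event was recorded.
    """
    known = {event: score for event, score in reactions.items()
             if event in SCENARIO_FOR_EVENT}
    if not known:
        return None
    strongest = max(known, key=known.get)
    return SCENARIO_FOR_EVENT[strongest]
```

For example, `choose_final_scenario({"drowning_baby": 0.9, "bat_insults": 0.4})` selects the Haunted Mansion. When two events tie, the one recorded first wins, which is an arbitrary choice of this sketch.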

At the end of each scenario the Bird-bot will give the user a note stating what his or her greatest fear or phobia is, according to the gameplay.

VII. Plans for the future

The prototype for Memesis is designed for use in CAVEs because we wanted to have maximum immersion in order to test the use of the biofeedback interface. The current design is single-player. If, once completed, the experiment is successful, plans for the future include extending the basic horror environment and making it possible for two people to play it over a network. At the same time we would develop the single-player game for a console setup, to see if the biofeedback interface would work at the console level. The latter step will require re-thinking of immersion and transparency issues, but would enable new applications such as force-feedback. Plans for future games include a romantic comedy played over a network for two people, among others.

VIII. Conclusion

Memesis started as an experiment in transparent interface design, but its biofeedback interface has led us to reconsider how interactive narrative works in virtual environments in general and with a biofeedback interface in particular. Our solution was to create a more episodic, or modularized, narrative that emphasizes interactive experiences at the entry level and more narrative-based (and therefore more passive) experiences at the final levels. The choice of final level, however, is determined by the user's emotional state, and may aid users in gaining self-knowledge about their fears and phobias.

-----------------------

Footnotes:

[1] Hodges, L., Rothbaum, B., Kooper, R., Opdyke, D., Meyer, T., North, M., de Graff, J. and Williford, J. Virtual environments for treating fear of heights. IEEE Computer 28, 7 (Jul. 1995), 27-34.

[2] http://www.gvu.gatech.edu/virtual/

[3] Mühlberger, A., Herrmann, M.J., Wiedemann, G., Ellgring, H., & Pauli, P. (2001). Repeated exposure of flight phobics to flights in virtual reality. Behaviour Research and Therapy 39, 1033-1050.

[4] North, M., North, S. and Coble, J. Effectiveness of virtual environment desensitization in the treatment of agoraphobia. Presence: Teleoperators and Virtual Environments 5, 3, (March 1996), 346-352

[5] Various students at Vassar College have worked on this project since its inception. They are: Matt Huntington, Jason Fotinatos, Phillip Clarke, Lauren Klein, Tom Gemelli, Amanda Tomasczck, Jason Teirstein and David Sturman. Volunteer contributors include Saif Ansari, Nick Nigro and his able assistant Dan, and Ben Horst. Vassar Faculty and staff who have lent their time and expertise to the project include Rain Breaw, Greg Priest-Dorman, Ken Livingston, John Long and Tom Ellman. This project would not have been possible without the support of the Media Cloisters at Vassar College and Michael Joyce and Colleen Cohen. I am also grateful for the support of Dean of the Faculty Barbara Page, Chair of the English Department Robert Demaria and Amanda Thornton, as well as David McMahan and Warren Buckland. Dave Pape (www.resumbrae.com) and William Sherman (www.ncsa.uiuc.edu/VR/People/wsherman.html) are consultants on the project.

[6] McMahan, Alison, "Immersion, Engagement and Presence: A Method for Analyzing 3-D Video Games," in The Video Game Theory Reader, edited by Mark P. Wolf and Bernard Perron, Routledge, August 2003, pp. 60-88.

[7] Lombard, M., Theresa B. Ditton, et al., "Measuring presence: a literature-based approach to the development of a standardized paper-and-pencil instrument." Project abstract submitted for presentation at Presence 2000: The Third International Workshop on Presence, http://www.presenceresearch.org/presence2000.html.

[8] http://astro.temple.edu/~lombard/research.htm

[9] Jay David Bolter and Richard Grusin use immediacy to define a similar concept, in their book Remediation (Cambridge, MA: MIT Press, [1999] 2000):

Immediacy (or transparent immediacy): A style of visual representation whose goal is to make the viewer forget the presence of the medium (canvas, photographic film, cinema, and so on) and believe that he is in the presence of the object of representation. One of the two strategies of remediation; its opposite is hypermediacy, "A style of visual representation whose goal is to remind the viewer of the medium. One of the two strategies of remediation" (272-73).

[10] Originally published in Michael Heim, The Metaphysics of Virtual Reality (New York: Oxford University Press, 1993).

[11] By Simulation, Heim means the trend in certain kinds of VR applications that try to approach photo-realism, using graphics or photographs, and that also use surround sound with an aim towards "realism." On Interaction, Heim points out that we think of any interaction mediated by a machine as a virtual one (including phone calls with people we never meet). By Artificiality Heim means what other scholars such as Cubitt mean by Simulation: in other words, an environment with possibilities for action (a world) that is a human construct. This construct can be mental, like the mental maps of Australian Aborigines, or constructed, like a 3-D VR. For Heim, Immersion refers to VR technology's goal to "cut off visual and audio sensations from the surrounding world and replaces them with computer-generated sensations." Full-Body Immersion, which Heim also called "Projection VR", following Myron Krueger, is defined as "Interactive Environments where the user moves without encumbering gear" (such as a head-mounted display). Projection VR requires more suspension of disbelief on the part of the user. Heim makes the distinction between VR and Telepresence: virtual reality shades into telepresence when you bring human effectiveness into a distant location, for example by using robotics. For Networked Communications Heim followed the definition of Jaron Lanier: a virtual world is a shared construct, an RB2 (Reality Built for Two). Communication with others in an environment is essential; on-line networked communities strongly embody this element of VR. Heim incorporates all seven elements into a new definition of VR: "An artificial simulation can offer users an interactive experience of telepresence on a network that allows users to feel immersed in a communications environment."